Effective work item management is crucial for any software development project. Azure DevOps Services, Microsoft’s cloud-hosted suite of development tools, supports work items throughout the development lifecycle. In this blog post, we explore the key work item features of Azure DevOps and share practical tips for getting more out of them. From creating and tracking work items to automation, collaboration, and reporting, this guide will help you streamline your development process and enhance productivity. Let’s dive in!

Section 1: Understanding Azure DevOps

Azure DevOps is a cloud-based platform that provides end-to-end software development tools, enabling teams to plan, develop, test, and deliver software efficiently. Its work item management capabilities are centred around three key elements: work items, boards, and backlogs.

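# Work items: Work items represent tasks, issues, or requirements within a project. The available types, such as user stories, bugs, and tasks, depend on the process the project uses (for example Agile, Scrum, or Basic), and each type can be customized with additional fields and states to suit your team’s needs.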

# Boards: Boards in Azure DevOps offer a visual representation of work items. You can create customizable Kanban boards, Scrum boards, or task boards to track the progress of work items and gain visibility into the development process.

# Backlogs: Backlogs provide a prioritized list of work items that need to be completed. They serve as a central repository for capturing and managing requirements, allowing teams to plan their work and schedule iterations effectively.

Section 2: Creating and Tracking Work Items

To effectively manage work items with Azure DevOps, follow these best practices:

# Clear item descriptions: Ensure work items have concise and descriptive titles and descriptions. This helps team members understand the task at hand and prevents ambiguity.

# Categorization: Use appropriate tags, areas, and iterations to categorize work items. This enables easier searching, filtering, and reporting, making it simpler to find and prioritize tasks.

# Establish relationships: Use parent-child links to build a hierarchy (for example Epic, Feature, User Story, Task), and use predecessor-successor or related links to record dependencies between items. This enables tracking progress at both micro and macro levels, enhancing transparency.

# Assigning and tracking progress: Assign work items to team members and set appropriate effort estimates. Regularly update the status and progress of work items to keep everyone informed and identify potential bottlenecks.
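The practices above map onto fields of the Azure DevOps REST API, which creates a work item from a JSON Patch document. The following is a minimal sketch rather than an official client: the helper names `build_work_item_patch` and `create_url`, the organization `contoso`, and the project `Shop` are made up for illustration, and the `Microsoft.VSTS.Scheduling.StoryPoints` field assumes the Agile process (other processes use different estimate fields). The code only builds the request; sending it would require an authenticated POST with the content type `application/json-patch+json`.

```python
import json
from urllib.parse import quote


def build_work_item_patch(title, description="", tags=(), area_path=None,
                          iteration_path=None, assigned_to=None,
                          story_points=None, parent_url=None):
    """Return a JSON Patch document (list of operations) for a new work item."""
    def add_field(ref_name, value):
        return {"op": "add", "path": f"/fields/{ref_name}", "value": value}

    ops = [add_field("System.Title", title)]
    if description:
        ops.append(add_field("System.Description", description))
    if tags:
        # Tags are stored as a single semicolon-separated string.
        ops.append(add_field("System.Tags", "; ".join(tags)))
    if area_path:
        ops.append(add_field("System.AreaPath", area_path))
    if iteration_path:
        ops.append(add_field("System.IterationPath", iteration_path))
    if assigned_to:
        ops.append(add_field("System.AssignedTo", assigned_to))
    if story_points is not None:
        # Assumption: Agile process; adjust the field for your process.
        ops.append(add_field("Microsoft.VSTS.Scheduling.StoryPoints", story_points))
    if parent_url:
        # Hierarchy-Reverse links this (child) item to its parent.
        ops.append({
            "op": "add",
            "path": "/relations/-",
            "value": {"rel": "System.LinkTypes.Hierarchy-Reverse", "url": parent_url},
        })
    return ops


def create_url(organization, project, work_item_type, api_version="7.0"):
    """Build the work item creation endpoint; the type is prefixed with '$'."""
    return (f"https://dev.azure.com/{quote(organization)}/{quote(project)}"
            f"/_apis/wit/workitems/${quote(work_item_type)}"
            f"?api-version={api_version}")


if __name__ == "__main__":
    patch = build_work_item_patch(
        "Add login page",
        description="Users can sign in with email and password.",
        tags=["ui", "auth"],
        iteration_path="Shop\\Sprint 1",
        story_points=3,
    )
    print(create_url("contoso", "Shop", "User Story"))
    print(json.dumps(patch, indent=2))
```

Keeping the payload builder separate from the HTTP call makes it easy to review or unit test exactly which fields a script would set before it touches a real project.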

Section 3: Automation and Collaboration

Azure DevOps offers several automation and collaboration features that can streamline work item management:

# Automated workflows: Use Azure Pipelines to automate parts of work item creation and tracking. For example, a classic build pipeline can be configured to create a work item when a build fails, and a failed test in a pipeline run's test results can be filed as a bug with the failure details attached.

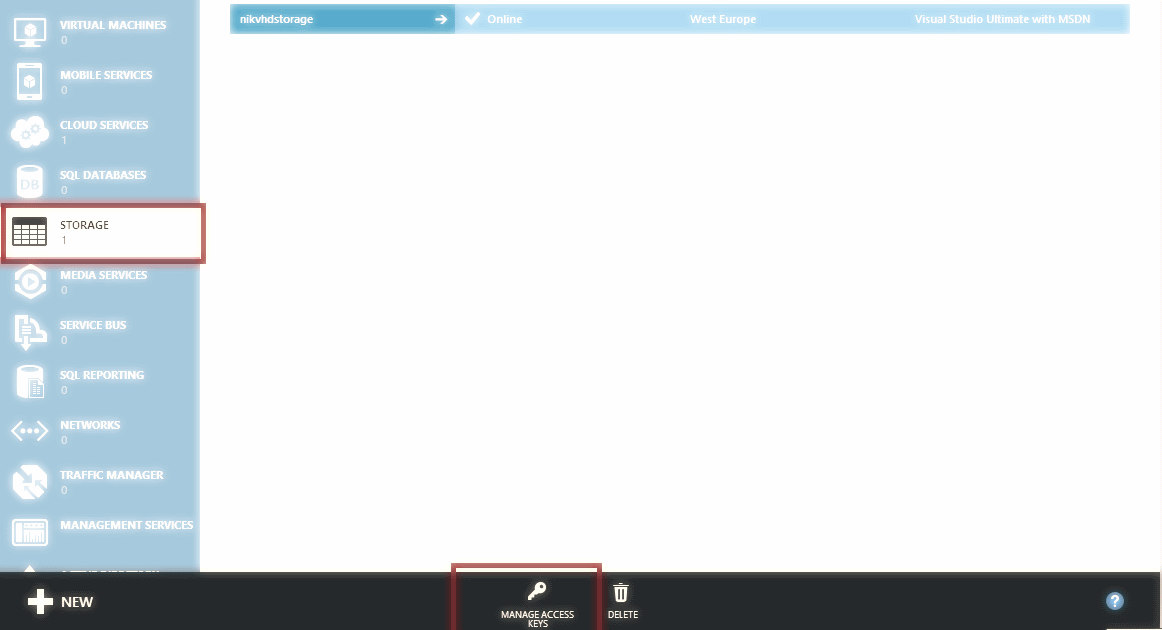

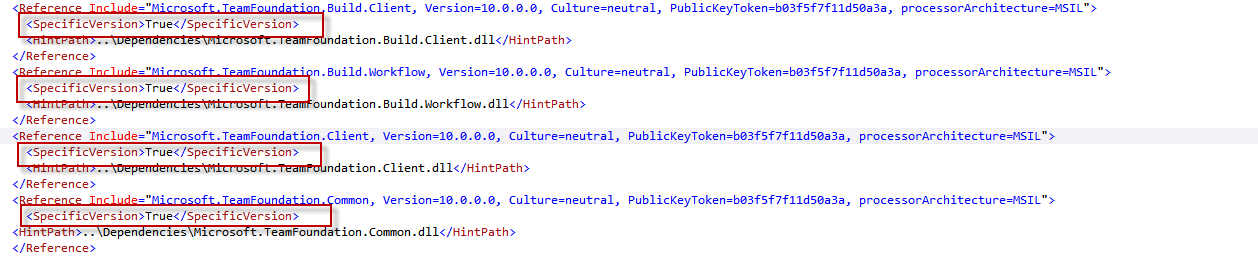

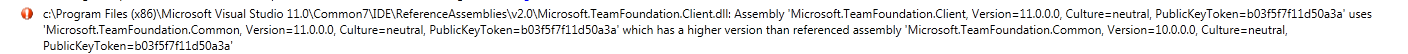

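# Integrations: Leverage integrations with popular development tools such as Visual Studio, GitHub, and Jenkins. These integrations connect commits, pull requests, and builds to the work items they address, so teams can keep working in their preferred environments without losing traceability. For example, once a GitHub repository is connected, mentioning AB#123 in a commit or pull request links it to work item 123.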

# Notifications: Configure notifications to keep team members informed about changes to work items. Built-in subscriptions deliver updates by email and can be adjusted at the user, team, or project level, while integrations such as the Azure Boards app for Microsoft Teams can post work item events to a channel.

# Real-time collaboration: Each work item has a Discussion section where team members can comment, @mention colleagues, and reference other work items by typing # followed by the ID. Keeping the conversation on the work item itself promotes effective communication and reduces delays caused by scattered email or chat threads.
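As one possible way to approach the automated workflow idea from this section, a pipeline step could read a test report and prepare a bug for each failing test. The sketch below assumes the report is JUnit-style XML; the helper names `failed_tests` and `bug_patch`, the tag values, and the `build 123` label are hypothetical. It produces JSON Patch payloads only and leaves the authenticated call to the work item REST endpoint out of scope.

```python
import xml.etree.ElementTree as ET


def failed_tests(junit_xml):
    """Yield (test_id, message) for each failed or errored test case."""
    root = ET.fromstring(junit_xml)
    # iter() finds <testcase> elements under <testsuite> or <testsuites>.
    for case in root.iter("testcase"):
        problem = case.find("failure")
        if problem is None:
            problem = case.find("error")
        if problem is None:
            continue  # the test passed or was skipped
        test_id = f"{case.get('classname', '')}.{case.get('name', '')}".strip(".")
        message = problem.get("message") or (problem.text or "").strip()
        yield test_id, message


def bug_patch(test_id, message, build_label):
    """Build a JSON Patch document describing a Bug for one failed test."""
    return [
        {"op": "add", "path": "/fields/System.Title",
         "value": f"Test failed: {test_id}"},
        # Repro Steps is the standard detail field on the Bug work item type.
        {"op": "add", "path": "/fields/Microsoft.VSTS.TCM.ReproSteps",
         "value": f"Failed in {build_label}: {message}"},
        # Hypothetical tags that make auto-created bugs easy to query.
        {"op": "add", "path": "/fields/System.Tags",
         "value": "auto-created; test-failure"},
    ]


if __name__ == "__main__":
    report = """
    <testsuite name="cart">
      <testcase classname="cart.Tests" name="test_total">
        <failure message="expected 10, got 9"/>
      </testcase>
      <testcase classname="cart.Tests" name="test_empty"/>
    </testsuite>
    """
    for test_id, message in failed_tests(report):
        print(bug_patch(test_id, message, build_label="build 123"))
```

In practice you would probably also search for an existing open bug with the same title before creating a new one, so that a test that keeps failing does not flood the backlog.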

Section 4: Reporting and Analytics

Azure DevOps provides powerful reporting and analytics capabilities to track project progress and identify areas for improvement:

# Dashboards: Create customized dashboards to display key metrics and charts related to work item management. This allows stakeholders to visualize the progress of work items and make data-driven decisions.

# Query and charting tools: Use Azure DevOps query and charting tools to slice and dice data, analyze trends, and identify bottlenecks or areas requiring attention.

# Burndown charts: Burndown charts provide a visual representation of the work remaining versus time, allowing teams to track progress and adjust their plans accordingly.
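To make the burndown idea concrete, the arithmetic behind such a chart can be sketched in a few lines. This is an illustrative calculation, not how Azure DevOps computes its own charts: the function names are made up, the ideal line is a simple straight line over calendar days (weekends and days off are not excluded), and the remaining-work figures are sample numbers.

```python
from datetime import date, timedelta


def ideal_burndown(total_work, start, end):
    """Linear ideal line: total_work on the start day down to 0 on the end day."""
    days = (end - start).days
    if days <= 0:
        raise ValueError("end must be after start")
    return {
        start + timedelta(days=i): total_work * (days - i) / days
        for i in range(days + 1)
    }


def burndown_status(actual, total_work, start, end):
    """Compare recorded remaining work with the ideal line.

    Returns (day, remaining, ideal, delta) tuples; a positive delta means
    the team is behind the ideal line on that day.
    """
    ideal = ideal_burndown(total_work, start, end)
    return [
        (day, remaining, ideal[day], remaining - ideal[day])
        for day, remaining in sorted(actual.items())
        if day in ideal
    ]


if __name__ == "__main__":
    start, end = date(2024, 1, 1), date(2024, 1, 5)
    # Sample data: remaining hours recorded at the end of each day.
    actual = {date(2024, 1, 1): 40, date(2024, 1, 2): 34, date(2024, 1, 3): 18}
    for day, remaining, ideal, delta in burndown_status(actual, 40, start, end):
        print(day, remaining, ideal, delta)
```

A run of positive deltas is the signal to revisit scope or capacity before the iteration ends, which is the adjustment the burndown chart is meant to prompt.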

Conclusion

Efficient work item management is vital for successful software development projects. With Azure DevOps, you have a powerful suite of tools at your disposal to streamline work item creation, tracking, automation, collaboration, and reporting. By following the best practices outlined in this guide, you can enhance productivity, foster effective collaboration, and deliver high-quality software on time. Start leveraging Azure DevOps with Almo and take your work item management to the next level. Happy coding!